Serendip is an independent site partnering with faculty at multiple colleges and universities around the world. Happy exploring!

We Are The Robots

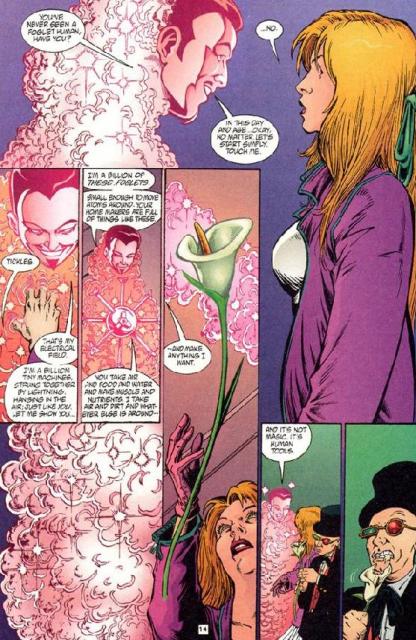

A page from Transmetropolitan, by Warren Ellis

The idea of a future in which humans interact with intelligent technology, or integrate such technology into themselves, fascinates and threatens authors and thinkers throughout science fiction and beyond. Some rejoice in the idea of the evolution of a post-human race of cyborgs, expecting an end to all mortal human suffering or calling it the next step in the evolution of the human race. Many others foresee the development of a race of intelligent computers or robots who compete with humankind for control of the Earth, or worry about the dehumanization of becoming part machine.

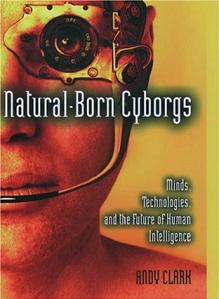

Andy Clark, in his book Natural-Born Cyborgs: Minds, Technologies, and the Future of Human Intelligence (note: link only works in Internet Explorer), doesn’t think that far ahead. There are no sentient machines or post-humans in his writing, no battle for the Earth and no cybernetic solution to humanity’s problems. In fact, Clark rejects posthumanism, calling it “dangerous and mistaken” and claiming that “the deepest and most profound of our potential biotechnological mergers…reflect nothing so much as their thoroughly human source” (Clark 6).

By Clark’s reasoning, if cyborgs are “human-technology symbionts: thinking and reasoning systems whose minds and selves are spread across biological brain and nonbiological circuitry” (Clark 14), then almost any nonbiological tool that extends a human’s “natural” abilities puts its user into this category. Consider the Walt Whitman Archive we looked at earlier this semester: Ed Folsom hailed it as a way to bypass the “rigidity…of our categorical systems” (Folsom 1) by preserving the many intricate connections between Whitman’s various journals, poems and other writing. According to Folsom, the Whitman database destroys the notion of a single authoritative version of any one of Whitman’s works, or of a single coherent narrative of his life.

Although the database (like any other catalogue) is limited by its creators’ ability to represent information and connections within it, the Whitman Archive helps us think about Whitman in a way that would be more difficult without it. The Archive, then, in addition to its massive usefulness as a provider of free information, is a tool to help us think more rhizomically. It stores information and connections that humans are capable of understanding themselves but find difficult to hold in their own heads all at once. The database (like its relatives the commonplace book, the scholarly archive, and even the shopping list) seems to function as an external hard drive for the brain, a sort of Pensieve that holds nonessential information and complicated rhizomic connections for safekeeping, with easy access whenever its users care to explore it.

Clark argues that in this sense, technology that would popularly be regarded as cyborg technology (including today's pacemakers and prostheses, but also the kind of futuristic enhancements predicted by post-humanists) is directly descended, and conceptually no different, from the use of other nonbiological tools to expand our brain function. He uses the example of a pen and paper used as an aid when doing complicated multiplication problems: in this process, the brain makes use of its ability to multiply small numbers, repeating this “simple pattern completion process” and storing the results outside itself “until the larger problem is solved” (Clark 6).

According to Clark, the tendency to integrate tools into our problem-solving systems (very often so completely that we cannot perform certain tasks without the relevant tools, as with large-number multiplication or telecommunication) is a large part of what makes the human brain so powerful and so distinct from the brains of other animals. Humans, Clark claims, are “natural-born cyborgs” (Clark 6): we have always incorporated nonbiological systems into our solutions and selves. He sees the future’s tiny skull-implant telephones as the continuation of a line of improvement that began with the invention of shouting and was followed by telephones and cell phones, rather than as a new step in the evolution of the human race.

Clark maintains that there is no isolatable ‘user’ of all these tools: the pen and paper used to store information outside the brain are tools only in the “thin and ultimately paradoxical” (Clark 7) sense that the various parts of one’s brain are tools. One reviewer sees this as a “nullification of the self,” a declaration that humankind is “nothing special after all.” Clark does use the phrase “there is no self,” defining “self” very specifically as “some central cognitive essence that makes me who and what I am” (Clark 138). Self, Clark argues, is defined by the various tools and methods we use, from the various parts of our brains to our useful bodies all the way out to all of the nonbiological elements we integrate into ourselves. Clark calls this “the ‘soft self’: a rough-and-tumble, control-sharing coalition of processes—some neural, some bodily, some technological” (Clark 138).

However, Clark is by no means stating that humankind is meaningless or ordinary, and his book is not, as the reviewer suggests, a “rejection of all that is transcendent in man.” In fact, Clark celebrates the amazing possibilities of a brain that can so handily use its environment to enhance itself. It seems more likely that his point in bringing forth the concept of the “soft self” was that the integration of machine parts even into one’s own body should not fundamentally alter one’s sense of self, since any advantage given by nonbiological tools already alters the self in the same way.

This conclusion seems to reject the image of developing technology as a threat, an image reflected in so much science fiction. Though Clark claims to reject posthumanism, his ideas seem to me to point at that very school of thought, and make me want to investigate it further. The integration of technology into human systems has been going on for centuries, yes, but our technology improves at an ever faster rate. Is Clark’s understanding of the self incompatible with the idea of a next stage of human evolution, in which technological improvement speeds up so much that the human race turns into something different enough to be called something other than human? Does the idea of a "soft self" preclude the division of a developing evolutionary chain into separate species? And does it, as Clark's reviewer suggests, somehow despiritualize or devalue the human experience?

Comments

Rhizomes and Cyborgs: Thinking differently about the self

jrf--

I want to commend you, first, on your exploitation of the resources of the internet in the construction of your paper: not only the images you use to draw us in, and to punctuate your commentary, but also your use of active links throughout the paper, which lead us beyond what you have written, and into some of the sources that have informed your thinking. I'd like to explore a little more w/ you how best to use such links, and how you understand their function: do you see them as activated footnotes, which lead us into the multiple layers underlying, and supporting, the surface of this project? (You also seem to recognize the limits of linking, which doesn't always operate equally well on all platforms....is that a puzzle you'd like to understand better?)

Also of particular delight to me is your seeing the connections between the material we read @ the beginning of the class, about the "rhizomic" quality of internet poetry archives, with Clark's notion of the "cyborg" as simply a (now speeded-up!) expression of the "human ability to integrate outside tools into our problem-solving systems." I might nudge you (if you are interested) to go on thinking in this direction: what particular (intellectual? literary?) activities are enabled by these very particular post-human/human-enhancing versions of software and open source?

I'm curious, too, to understand better how you yourself feel and think about Clark's insights. You cite, several times, "one reviewer" who is fretting about the "nullification of the self" implied by Clark's views of human-technology intersections. You seem @ first to reject the image of developing technology as a threat, not "despiritualizing" or "devaluing" human experience, but rather precisely the opposite: "celebrating the amazing possibilities of the human" (or, as Shayna says in her comment, "no tool can be incompatible with a human being because it is made precisely to augment something we already have"). But then you seem to reverse direction (?), questioning whether the increased rate of change alone might make more difference--and a more troubling difference?--than you had first thought. Self as "soft," self as "altered," okay, but self as...something that is not self? "The human race turns into something different enough to be called something other than human"? A "separate species"?

I'm thinking now of the curiously analogous (is it?) notion of copyright law--guided by very clear definitions of "how much newness" needs to be incorporated to earn a patent. Is that the sort of question you are teasing @ the end: how much evolution would make us into something we can no longer recognize as human, as "ourselves"? Why might that sort of "originality" be troubling? Would it be more troubling to you than its lack, which has also bedeviled our conversations this semester? Is this a place where understanding more about the stages in the history of human evolution might be of use?

It may also interest you to know that Wai Chee Dimock, who will be coming to visit our class after break, has invited some of us Bryn Mawr faculty to participate in her "Rethinking World Literature" Facebook site, where we have also been discussing the "posthuman," and where Paul Grobstein has offered a definition similar to yours (and Clark's), something he calls the "human inanimate":

"Most of the history of humanity involves the creation of physical artifacts that reflect and extend the capabilities of human beings (axes, arrow points, cooking pots, decorations, books, airplanes, dwellings, cities, libraries etc)....We are certainly doing more of this than we did thousands of years ago, but I see that as continuous with the evolution of humanity rather than 'post human.'"

P.S. Also be sure to check out sgb90's take on Andy Clark's work, in "Expansion of Self Through New Technologies."

Clark and Hobbes with a Side of Speculation

Much of Clark's argument for using tools as the integration of human and technology reminds me of Hobbes' views of human beings. Hobbes perceived humans as nothing more or less than machines. Free will would be explained as a function of our systems. Tools, then, would be nothing more than extensions of our bodies' mechanics. Combining these two theories would make something akin to "technology can be said to be integrating with humans when a person writes words on a piece of paper because the pencil is a continuation of our communication systems." Would this mean that no tool can be incompatible with a human being because it is made precisely to augment something we already have? Taken this way, a faster rate of technological advancement might not matter, because the technology would reflect the systems we, as humans, have. The human experience could not be devalued through technology because technology is constructed from human experience.