
Serendip is an independent site partnering with faculty at multiple colleges and universities around the world. Happy exploring!

| Emergent Systems 2004-2005 Forum |

Comments are posted in the order in which they are received, with earlier postings appearing first below on this page.

| a new year ... Name: Paul Grobstein Date: 2004-09-04 13:33:46 Link to this Comment: 10767 |

Looking forward to where we go this year (and to the promised coffee and muffins).

| Douglas Adams and emergent perspectives Name: Doug Blank Date: 2004-09-08 11:12:36 Link to this Comment: 10801 |

| Name: Paul Grobstein Date: 2004-09-11 12:04:03 Link to this Comment: 10820 |

What particularly struck me was the emerging sense (for me at least) that there is something both fundamental and not-yet-fully-recognized/understood about "irreversibility". This goes back (again, for me at least) to the issue of whether the "block" model of time is the appropriate way to think about change in general, and hence to the determinacy/indeterminacy issue we've repeatedly touched on. My sense is that the preferences of many (but not all) physicists (and others who either have the same preferences or feel compelled to model themselves on those) notwithstanding, there really IS a necessity to accept the "reality" of irreversibility (and hence of indeterminacy).

The key here (again "for me at least") is Al's pointing out that there really is NOT a way to make the second law of thermodynamics compatible with deterministic physics (the secret "coarse graining") and, further, that there is an unavoidable irreversibility inherent in quantum mechanics as a descriptor of actual observations (different wave functions can collapse to the same observations, hence one cannot, from the observations, go back uniquely to the wave function).

Some (related?) things that struck me. The "arrow of time" would be much easier to make sense of if we accepted that "time" was, as in emergent systems models, iterative change with an indeterminate element. And it may be useful in the future to equate "indeterminacy" with "irreversibility" in the sense of inability to specify a unique antecedent for any given state. And that in turn builds what may be an important bridge between energy/matter and "information". Physics (at least "classical" physics) is the effort to understand matter/energy in terms that are relatively independent of its form/organization at any given time ... "information" is that which has been left out in such an analysis (as both Chomsky and Shannon left out "meaning"?), aspects of form/organization of matter/energy that reflect/relate to irreversible change?
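To make the "no unique antecedent" sense of irreversibility concrete, here is a minimal Python sketch. It assumes a toy world whose states are the integers 0 to 11 and a hypothetical coarse-grained `observe` function that reports only which bin of four a state falls in; neither the state space nor the binning comes from the discussion. Grouping states by what they produce shows directly when a step can and cannot be run backwards.

```python
from collections import defaultdict

def observe(state: int) -> int:
    """Hypothetical coarse-grained observation: only the bin of width 4 is seen."""
    return state // 4

def antecedents(states, f):
    """Group states by f(state): each key lists every state that could have produced it."""
    preimages = defaultdict(list)
    for s in states:
        preimages[f(s)].append(s)
    return dict(preimages)

if __name__ == "__main__":
    table = antecedents(range(12), observe)
    for obs, candidates in table.items():
        # More than one candidate means the observation cannot be traced back uniquely.
        print(obs, candidates, "reversible" if len(candidates) == 1 else "irreversible")
```

Running it lists three observations, each with four candidate antecedents. Swapping in an identity function for `observe` gives exactly one candidate per observation, i.e. a step that can be run backwards.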

| Anal teacher as entomologist Name: Jan Date: 2004-09-16 11:54:05 Link to this Comment: 10853 |

| Conscious automata hypothesis Name: Wil Frankl Date: 2004-09-23 09:32:01 Link to this Comment: 10927 |

| Zombies?! Name: Doug Blank Date: 2004-09-23 11:29:34 Link to this Comment: 10930 |

Both Paul and Rob seemed to imply this morning that it is logically possible (and maybe has even been seen in the world) that people can have their consciousness removed, and go about their entire lives without knowing it. This is what philosophers of mind would call a "zombie".

Can you explain how you see this as being logically possible?

| zombies Name: Jan Date: 2004-09-23 14:39:22 Link to this Comment: 10936 |

I think what they're talking about is simply not setting off "consciousness" as a category, thus avoiding the problem that reductive materialist accounts of the mind have in explaining how consciousness might emerge from a purely physical system or how any explanation of consciousness can be reduced to physical terms.

Of course, it does appear that large numbers of zombies have elected U.S. presidents.

| matters arising ... Name: Paul Grobstein Date: 2004-09-23 19:00:14 Link to this Comment: 10937 |

The new Serendip exhibit I mentioned as related to Rob's earlier Descartes plus exhibit (highly recommended re today's conversation) is Writing Descartes: I Am and I Think, Therefore .... There's an on-line forum there too, and because this morning's discussion triggered thoughts that seemed germane, I posted them there. I think they're relevant here too and trust that anyone interested will prefer to follow the link rather than have me repeat the (typically a bit long) posting here.

I'm not sure this will answer the question of "logically possible", since I regard the question as an experimental one rather than a "logical" or "semantic" one, and "live their entire lives" may be a bit of a stretch, and the relevant observations depend on what (as Rob said) is currently not achievable: a criterion by which to definitively decide whether another person is conscious (or not). With all of those caveats, here briefly is what I was referring to this morning ...

People report acting without being "conscious" under a wide variety of circumstances: trivially, as when one has one's attention called to some input of which one was previously unaware (as Rob illustrated), or when one realizes that one has gotten to a particular place and has no memory of how one got there, and more dramatically when told one did A, B, or (your choice) while drunk. Comparable dissociations, to varying degrees, between action and consciousness occur in a variety of clinical syndromes, and are particularly characteristic of damage to or affecting a particular part of the brain, the neocortex. Perhaps the most obvious normal dissociation occurs in sleep, where the body (and nervous system) is actually quite active but most people report that at most times they were not "conscious". During this period, the neocortex displays a characteristic form of electrical activity (a "synchronized EEG") that is quite different both from the awake (conscious) state and from dreaming (arguably a state when one is "conscious" but not normally responsive to external signals). Even more dramatic is sleep-walking, which I trust everyone will regard as "action". Sleep-walkers report no "consciousness" while sleep-walking, and the EEG pattern correlates with this (i.e., is "synchronized", as in other sleep states).

If we take people at their word about what they are "conscious" of and when, the observations are consistent with the notion that "consciousness" corresponds to particular kinds of activity in a particular part of the brain, the neocortex. It then becomes relevant that every once in a while, a human being turns up in a doctor's office, is examined for one or another complaint, and proves to have little or no neocortex.

Ergo, with caveats mentioned at the outset: we are all zombies in some/many ways some/most of the time (which in turn says, in relation to the "logical" problem?, that "consciousness", whatever it does, is not necessary for complex adaptive behavior). Some people are more zombies more of the time. And there may exist people who are all zombies all of the time.

| serendipitously: surplus meaning Name: Anne Dalke Date: 2004-10-01 00:48:31 Link to this Comment: 10999 |

Rob--

Another rich, rich presentation this morning, for which many, many thanks (doubled because you gave us two of these). And which generated--for me--a number of questions. Which I record here, for myself, but also in the hopes that you'll bite, give me some (more?) answers.

Your presentation stirred up three lines of thinking for me; I've organized them here from most to least stuck-ness.

1. I got stuck (tried to get an answer then; didn't; am trying here again) on your slide about "extensional reductionism." I heard this as another stab @ what you'd identified, last week, as the Cartesian Impasse, aka Mind/Body Problem, in which the relationship (more precisely, how to negotiate the relationship) between two different things--mental and physical--continues to bedevil psychologists. I heard Paul offer an alternative formulation on the Descartes forum: that those "two things" might more productively be characterized as "two different forms of organized matter." I liked the way that formulation got Rene/you/us out of the pickle of understanding how non-material mind and material reality can influence one another. But this morning, when I heard you re-represent the encounter between "brain/mind and its environment," I thought you were getting us (or at least you were getting me!) stuck again, in a "relationship" between "mental" and "physical" that could not be "negotiated" (with every pun here intended).

2. Less stuck, more struck: Your answer to the question of where the Iliad resides--in the interaction between the sequence of words and the decoder/reader--is the central idea of a long-important methodology in literary studies known as reader-response theory, which fits quite nicely into the notion of meaning-as-relation: textual meaning is constructed in the interaction between the writing and the reading; it comes into existence when the text is read.

When I evoked this process during this summer's conversation about Information, I wasn't sure whether I'd talked myself out of or into a hole. If it was the latter, I think you've just talked me back out of it: your description of "surplus meaning" (neural states being extensionally but not intensionally equivalent to perception: to "see" a cup "means" more than what an account of the state of the neural networks describes) is an account, in my terms/landscape, of the process of literary interpretation, in which any "text" always exceeds the grasp of any "interpretation," any "reduction," any story of what it is/does. This is pure Derrida: the original is always deferred, never to be grasped.

3. Least stuck, real-est question (aka what I really want to know): Where I got most excited, this morning, was when you used G.H. Lewes to show that, from the very beginning, emergence created a problem for the nature of knowing: Because effects are emergent, deduction is insecure. And because effects are emergent, prediction is not reliable. We can't go back (because the loss of information in arriving at "meaning" is not recuperable?) and we can't predictably go forward (because of the complexity of the interactions?). My question is whether, in the universe you've just traced for us, the unpredictability of the future and irreducibility/irreversibility of the present (the inability to reduce a cause to its effects, to play the tape predictably backwards) are the same thing. Do not being predictably predictive and not being reliably deducible arise from the same cause, for the same reason?

Serendipitously (was this really serendipitous?) a new story has just appeared on the Descartes exhibit, in which the narrator

watches her storytelling mind begin to fan the spark of annoyance into a flame [and says] god damn-it I am not letting myself react to whatever is getting triggered by my neural-associations.

| Rob 2 ... Name: Paul Grobstein Date: 2004-10-05 12:32:37 Link to this Comment: 11024 |

Among the things that struck me as most important in our conversation last week was Rob's effort to carefully distinguish between "emergent" with reference to science and to "nature". Lewes, if I understand it correctly, wanted to call something "emergent" if "it cannot be reduced either to the sum ... or ... difference" of measurements of interacting components. This is clearly a matter of "science" rather than of "nature", in the sense that it makes the limitations of human analytic procedures the criterion for "emergence". With advances in analytic tools (calculus, non-linear dynamics, computers) things that were previously "emergent" cease to be so by this definition. And, by this definition, "emergence" might in principle disappear entirely as a category (like god? like mind?).

Needless to say, I'm not comfortable with such a "human perspective defined" view of "emergence" and, fortunately, I don't think anyone else has to be either. The touchstone here for me is something I wrote about years ago, the notion that the properties of elements at one level of organization permit, but do not determine, the properties at some higher level of organization. The "emergent" properties thus depend on an "addition of information", most typically from outside the system. A trivial example is water molecules; the emergent property of gas/liquid/solid results from the properties of water molecules TOGETHER with the additional information of the temperature of their surroundings. "Stigmergy" is a slightly more elaborate version of the same thing. Ants behave differently not because the properties of the elements have changed but because the collective behavior of the elements has created a new (and/or potentially constantly changing) information source. This definition of "emergence" as involving "information addition" escapes the problem of being defined by the analytic limits of humans but introduces some new problems including how to define what is "inside" and what is "outside" the system, and what one means by "information". It also neglects two features which are prominent in biological (and cosmological) evolution: changes in properties of the elements and the association of elements into new combinations that themselves exhibit "emergent" properties.

And that brings us to Rob's "epistemological emergence" and, in one more step, to Anne's "unpredictability of the future and irreducibility of the present". If one introduces into the properties of any of the interacting elements or into the interactions between them a degree of genuine indeterminacy, then the past becomes to some degree unrecoverable (several different states could have given rise to the present one) AND the future becomes to some degree unpredictable. And if one now allows such effects to propagate and amalgamate over billions of years and over successive rounds in which interactions create new assemblies that themselves become the foundation of further assemblies .... I assert that there is a genuine and profound capability of emergence to create new things: "new" not only in the sense of surprising to a particular generation of investigators, indeed not only "new" in the sense of surprising to humans, but "new" in the universe. Over time, assemblies of matter can come into existence that have properties arbitrarily distant from any previously existing ones however one chooses to measure them (and whether anyone is there to measure them or not).
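The claim that a dose of indeterminacy makes the past unrecoverable AND the future unpredictable can be seen in a very small model. A minimal Python sketch, assuming a toy 3-bit register (our own choice, not anything from the discussion) whose update rule drops the lowest bit (so two different states lead to the same place) and injects one random bit at the top (so one state can lead to two places):

```python
import random

BITS = 3                      # toy state: a 3-bit register (illustrative assumption)
N_STATES = 1 << BITS

def successors(state: int) -> set[int]:
    """States reachable in one step: drop the lowest bit, then inject a 0 or 1 at the top."""
    shifted = state >> 1
    return {shifted | (bit << (BITS - 1)) for bit in (0, 1)}

def predecessors(state: int) -> set[int]:
    """States that could have produced `state` in one step."""
    return {s for s in range(N_STATES) if state in successors(s)}

def sample_history(start: int, steps: int, rng: random.Random) -> list[int]:
    """One possible trajectory, choosing the injected bit at random at each step."""
    history = [start]
    for _ in range(steps):
        history.append(rng.choice(sorted(successors(history[-1]))))
    return history

if __name__ == "__main__":
    for s in range(N_STATES):
        print(s, "next:", sorted(successors(s)), "prev:", sorted(predecessors(s)))
    print(sample_history(5, 6, random.Random(0)))
```

Every state has two possible successors and two possible predecessors. Fixing the injected bit to 0 would remove the forward branching while leaving the backward ambiguity in place, so in this toy model, at least, the two directions can be pulled apart.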

The brain/mind? What's its relation, and the relation of "discourse", to all of this? Most obviously, the brain is an "emergent", something that came into existence but could neither have been predicted to do so nor be back-traced with any certainty. Perhaps most importantly though, the brain/mind (as it exists in humans) is a powerful amplifier of the novelty-generating emergence process. By combining a degree of indeterminacy, a capability to generate abstractions (stories), an ability to conceive counter-factuals, and an ability to exchange both stories and counterfactuals (engage in "discourse"), the brain greatly increases the range and rate of explorations of achievable forms of organized matter, an exploration that has been going on since the big bang (and would be, whether or not the human brain/mind were around).

All of this may sound a bit like nothing more than a return to From the Active Inanimate to Models to Stories to Agency, and in some sense it is. But I think there has been a lot of needed filling in/clarification along the way, both in our discussions and elsewhere. Among the latter is a new set of understandings about the nature of information, and a recognition that some architectures have important properties that others don't. The former says that information processing in general involves both loss and gain of information (relevant for "surplus meaning"?); the latter that bilayer networks can represent "group purpose" and so compare a measure of "group product" with "group purpose" in a way that single-layer networks cannot.

| Where's the Cut? Name: Wil Frankl Date: 2004-10-07 09:33:26 Link to this Comment: 11042 |

| Rob 3, or cut, cut, who's got the cut? Name: Paul Grobstein Date: 2004-10-07 17:03:40 Link to this Comment: 11048 |

There ARE "two things". One is the unconscious, a set of brain activities of which one is for the most part unaware but which, as Rob said, has enormously sophisticated capabilities. The other is a second set of brain activities which uses the first as its input and which constitutes internal experience, all that of which we are aware. It (as per Rob, there may be several layers of "it" which for some purposes can/should be distinguished but I don't think that is critical here) is not a replica of the unconscious but rather an abstraction of it, a "compression" of it, a "story" about it, less useful for some purposes, more useful for others. Among the latter is the capability to entertain Tim's counterfactuals (both about "self" and about other things), and so to give organisms which possess a "bipartite" brain organization the capability to play a role in their own ongoing emergence (as well as that of things around them).

Let's pause here to add into the skein Wil's concern, which is related in turn to Anne's interest in clarifying "interpretation" (literary and otherwise). What's important about Rob's martian (or the color blind neurobiologist) is (thanks, Mark) not ONLY the issue of whether there is a perfect identity between physically observable brain states and internal experience but ALSO the separate issue (usually entangled with the first but needing to be disentangled) of whether a description of a brain state FROM THE OUTSIDE will give the outsider the internal experience associated with the original brain state. I, for one, am quite convinced by available observations (though, of course, never definitive) that the first is the case, there is an absolute identity between brain state and internal experience. I am equally convinced, for the same reason (and with the same cautions), that the second is NOT the case. No description of the state of the brain, no matter how complete, will provide an observer with the "experience" the observed state supports. This can be had only by BEING that nervous system in that state.

So, re Wil ... reductionism is fine, so far as it goes. An exact clone of me (or anyone else) will, at the instant of coming into existence, be experiencing exactly what the cloned entity was experiencing (and, yes, the two will drift apart over time). BUT no external observer will be able to say with any degree of certainty what the internal experience of EITHER of them is (other than that they are instantaneously identical). An external observer may (and will), as Tim says, presume a person (clone or original) HAS an internal experience, guess at it, model it, revise the model based on new observations (which may include measures of brain activity) but what they will inevitably have is a story about how an internal experience is created and a more or less good guess of what it is in any particular case. The internal experience itself is deeply and fundamentally "private", achievable only by BEING that neuronal ensemble and state. There is no further decoding/recoding of physical states that will get one any closer to the experience itself.

BUT, there is a clear place in this scheme for "interpretation", literary and otherwise (recognizing that these interpretations are themselves also brain states). If one's concern is to try and understand internal experiences, either in and of themselves or because one recognizes that they play a causal role in other things one is interested in (which, with no mystery whatsoever, they do; they are physical states and so can influence physical states, both their own and others), then one has no choice but to engage in "interpretation", the creation of models (in one's own brain) of the internal experience of someone else and the further refinement of such models based on additional observations (of them, or of their artifacts). And this in turn may be worth keeping in mind in talking about the Two Cultures. "Humanities" originated in the exploration of humans, and has had trying to understand human "experience" at its core. "Science" originated in the exploration of things outside humans, things which seemed (and for the most part still seem) to lack "internal experience". As science began/continues to take humans into its ken, its methods have necessarily changed to admit the need to acknowledge a significant role for internal experience. Conversely, as humanities/social sciences became/are becoming increasingly aware of how little internal experience actually has to do with human behavior/creation, they too are evolving. The upshot is a significant and progressively overlapping domain of observations/approaches (as well as, of course, a lot of screaming and turf battling).

Not bad for a morning's work, not bad at all. But there's more. As Al said, in going from the description of the entire brain state to the internal experience (or to the interpretation of it) there is a "compression", a recoding of the original state into a new form whose properties depend to some extent on the coder. And in going from that to a new brain state (in another brain, or perhaps in the same brain) there is an expansion, a decoding that also depends on another element (the decoder). So, what is transmitted from one brain to another (and probably between different parts of one brain) is not "the state" but rather an altered/impoverished "representation" of the state. Both Shannon and Chomsky focused on how this representation is structured, giving rise to the notions of "information" and "syntax" without "meaning" and "semantics". And it is true that bit strings and utterances, in isolation, lack meaning/semantics. And it is true that "meaning" is not transmitted in isolated bit strings and utterances. But "meaning" DOES exist in what gives rise to the isolated bit strings and utterances (one brain, or one part of one brain) and is created anew in another brain (or part of the brain) on receipt of the bit string/utterance. Signals do not themselves convey meaning, but a transmitter may intend meaning in creating a signal and a receiver may infer/create meaning from the signal.

What all this suggests, if this isn't enough already, is that "meaning" exists only insofar as there are brains (or other entities with comparable architectural complexity) to create it. And that "meaning" depends on "information" which in turn depends on compression/expansion processes that are largely "irreversible". So, further filling out From the Active Inanimate ..., irreversibility and information have been around for a VERY long time; adaptability, reflecting progressive compression/expansion cycles built on them, yielded model makers a pretty long time ago; and story telling/counterfactuals/meaning is a quite recent development rooted in all the preceding. And along with THAT, still more recently (a LOT more so?), came the possibility of a distinction between "nature" and "science", which itself is nothing more (and nothing less) than an acknowledgement that conscious story is always a compression reflecting, among other things, properties of the compressor (Doug's "Introspection is the work of the devil" may or may not be true, but those who deny either the existence of consciousness or the need to take it seriously are .... model builders). Given that "science" is a human story and hence, necessarily, a part of "nature", I'm a little reluctant to make a "science"/"nature" distinction but agree it is sometimes useful to make explicit that there have been and will always be "scientific stories" that are discarded because they prove to have been created from too narrowly "human" a perspective.

| Why the Martian example leaves me cold Name: Ted Wong Date: 2004-10-07 19:22:49 Link to this Comment: 11049 |

The Martian will have a lot of data to sort, but we're assuming that it's smart enough to handle it. The Martian will still never have been Paul -- all the Martian has is knowledge of the states of Paul's brain when Paul is experiencing all those things -- and an understanding of what brain states are associated with other brain states. It'll see that the state for seeing the cup is associated with the state for thirst, for wine, for happiness, for poverty, for all sorts of things which in turn have their own associations -- with different strengths -- to states corresponding to other experiences.

Here's the thing: I'm not willing to say that experiencing seeing the cup is necessarily different from just having all the associations. Maybe Paul's internal experience is just an invoking of near-countless associations, which invoke others and so on in a rich but describable cascade. I can't say that it is that, but I don't think there have been any arguments that have gone beyond an assertion that internal experience must be different from knowing the states. I don't know the nature of my internal experience of the world, just like I don't know what it would mean to be able to understand all the brain states of Paul's world of cup-associations. I don't know, and I'm not ready to base arguments on either one.

And it seems to me that the whole problem is extrascientific anyway. I believe that it will eventually be possible to build a computer that passes the toughest Turing test -- no empirical difference between it and a reflexively conscious person, and so no way to falsify any hypothesis. Now, it's true that there are worthwhile intellectual endeavors which aren't scientific, but we should be clear about which of those things we're doing and what the criteria are for evaluating statements. I think we've been a bit careless so far. That, or I just haven't been hearing the caution. Either way, I'd ask that we be more specific about that sort of thing: which statements are falsifiable, and what do we do with statements that aren't.

| Not the final word: no surplus meaning Name: Anne Dalke Date: 2004-10-08 01:02:14 Link to this Comment: 11057 |

What I was trying to say, just at the end of this morning's session, was that if Rob was "right," last week, in describing the way in which emergence creates a problem for "knowing," because deduction is insecure, prediction is not reliable--that is, because the future is unpredictable and the present is not reducible to the past (not just time, but the information transferred is irreversible)--THEN MEANING IS THE WAY WE TRY TO BRIDGE THE GAP. Meaning is thus in no sense "surplus"; it's the explanation, the story we "make up" to explain how we got from A to B, how we might have gotten to B from A.

That said, I would not say that if one's concern is to try and understand internal experiences...then one has no choice but to engage in "interpretation."

I was lying on a hillside yesterday (waiting for my daughter's track meet to start) and thinking how much happier I would be if I weren't constantly "engaging in interpretation," weren't constantly telling stories to make sense of--make meaning out of--my experiences. It's no easier for me than other members of our group to "stop thinking," but I think I DO have a choice not to. There are lots of religious practices which could help me in this process....I could master meditation, learn to distance myself from the throes of the everyday....

That said (I said that--theoretically--I could stop; I also said I have trouble stopping...) the claim that, in going from one brain state to another there is a compression, a recoding...[then] an expansion, a decoding...an altered/impoverished "representation" of the state seems to me an impoverished representation of what happens. (I actually made the same claim, above, when I said that any "text" always exceeds the grasp of any "interpretation," any "reduction," any story of what it is/does, so this is as much self- as other-correcting--but today I do think differently:) meaning can be "added" both in expansion (as in "let me expand on this...") AND in compression (think of how often you use an astute abstraction to "make meaning" of a range of seemingly random observations in a classroom--)

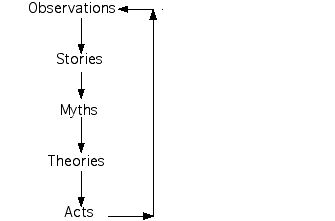

Now, as to whether any of this is "extrascientific" or "falsifiable"....? Hm: seems we could run an experiment, along the lines of "whisper down the lane...."? Or think in terms of this figure (fuller explanation @ Science as Story: Re-reading the Fairy Tale), which doesn't image the interactions among brain-parts or brains, but does suggest, abstractly, some ways in which "added" meaning might accrue, be revised (subtracted?), re-articulated....

| In what way are emergent phenomena scientific? Name: Doug Blank Date: 2004-10-08 10:19:35 Link to this Comment: 11062 |

I agree with Paul that when we are trying to understand nature we run up against the limits of our selves. Of course we must be aware of those limits when formulating our stories/science.

But I very much appreciated the distinction that Rob made between making claims about nature and making claims about science, which I think is a different issue. One difference is that some limits of science are independent of nature. For example, we know that there are "things that are true" but "unprovable" in systems of sufficient complexity. That is a logical, provable limit (which may have nothing to do with nature, by the way). We can also have other scientific statements about scientific theories, and discuss their limits.

(I probably make the mistake Rob pointed out all the time: I blur statements about science and nature into one big mush. In my defense, though, as a "model builder" I construct my own nature with computer programs. Sometimes when we model builders talk about the science of a model, we mean the ideas behind it, as opposed to the actual implementation (the running program). But even here, it would be wise to make Rob's distinction.)

Now, what are statements about consciousness? Are they statements about nature, or science? Unless you believe that we absolutely have the science "correct" (and therefore can say something about nature), then they really must be statements about science---the story of consciousness.

To carry on from Ted's point: in what way can consciousness be a scientific idea at all? In what way can any emergent phenomenon be scientific? First, does anyone deny that consciousness is an emergent phenomenon? To me, all emergent phenomena are "just" patterns, categories of organization. Not that they can't have properties, which in turn have effects. But maybe patterns, by their very definition, can't be addressed by reductionist scientific methods.

You could try to make a science based on Al's termites: how big will the pile get? how fast will it move? how stable is it? In fact, I'm reading a paper right now titled "Towards Performance Guarantees for Emergent Behavior", so people are interested in this. But this is science without a foundation, without lower-level axiomatic principles. Is this what A New Kind of Science is really about?
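Doug's termite questions (how big will the pile get? how fast?) can at least be posed, if not answered in general, in a simulation. Below is a minimal Python sketch of a chip-piling "termite" model in the style of Mitchel Resnick's StarLogo termites. We are assuming this is roughly what "Al's termites" refers to; the torus grid, the counts, and the pick-up/drop rules are our own illustrative choices. Each termite wanders at random, picks up a chip it bumps into, and, when already carrying one, drops it beside the next chip it finds, so chips on the ground act as stigmergic cues.

```python
import random
from collections import deque

MOVES = [(-1, 0), (1, 0), (0, -1), (0, 1)]

def shift(cell, move, size):
    """Neighbouring cell on a size x size torus (edges wrap around)."""
    return ((cell[0] + move[0]) % size, (cell[1] + move[1]) % size)

def largest_pile(chips, size):
    """Size of the largest 4-connected cluster of chips (breadth-first search)."""
    seen, best = set(), 0
    for start in chips:
        if start in seen:
            continue
        seen.add(start)
        queue, count = deque([start]), 0
        while queue:
            cell = queue.popleft()
            count += 1
            for m in MOVES:
                nxt = shift(cell, m, size)
                if nxt in chips and nxt not in seen:
                    seen.add(nxt)
                    queue.append(nxt)
        best = max(best, count)
    return best

def simulate(size=20, n_chips=60, n_termites=10, steps=5000, seed=0):
    """Toy model with illustrative parameters; returns (chip cells, chips still carried)."""
    rng = random.Random(seed)
    cells = [(x, y) for x in range(size) for y in range(size)]
    chips = set(rng.sample(cells, n_chips))
    termites = [[rng.choice(cells), False] for _ in range(n_termites)]  # [position, carrying?]
    for _ in range(steps):
        for t in termites:
            t[0] = shift(t[0], rng.choice(MOVES), size)  # random walk
            if t[0] not in chips:
                continue
            if not t[1]:
                chips.remove(t[0])  # empty-handed: pick the chip up
                t[1] = True
            else:
                free = [c for c in (shift(t[0], m, size) for m in MOVES) if c not in chips]
                if free:  # carrying: drop it in an empty cell next to the chip found
                    chips.add(rng.choice(free))
                    t[1] = False
    return chips, sum(1 for t in termites if t[1])

if __name__ == "__main__":
    start, _ = simulate(steps=0)  # same seed, so this is the initial layout
    end, carried = simulate()
    print("largest pile before:", largest_pile(start, 20),
          "after:", largest_pile(end, 20), "carried:", carried)
```

No particular pile size is claimed here: the numbers depend on the parameters and the seed, which is rather the point of asking for "performance guarantees". The one thing the code does guarantee is conservation: chips on the ground plus chips being carried always equals `n_chips`.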

| economic decision making Name: Jan Date: 2004-10-19 15:42:36 Link to this Comment: 11136 |

| ontological irreversibility Name: Anne Dalke Date: 2004-10-21 09:57:17 Link to this Comment: 11164 |

I thanked Mark, after his provoking presentation this morning, for taking seriously my question about whether the unpredictability of the future and irreducibility/irreversibility of the present (the inability to reduce a cause to its effects, to play the tape predictably backwards) are the same thing. Though I do want to note, here, that I (think I) disagree w/ his answer: that indeterminacy going forward is a practical (epistemological) problem, while indeterminacy going backwards is ontological (inherent in the nature of the process).

Alongside the statement that emerged at the very end of our discussion, that the "story" (of how the Monopoly hotel, say, got to Park Place) creates the appearance of irreversibility, I would actually like to lay an alternative formulation: that

irreversibility creates (i.e. induces us to produce) the story.

Storymaking is how we negotiate the gap between...

what was and what is, how we explain the passage.

But it does not in itself CREATE it, I don't think.

This really IS (in other words) ontological, in both directions.

| reality check Name: Jan Date: 2004-10-21 18:08:49 Link to this Comment: 11168 |

| thanks and ... Name: Paul Grobstein Date: 2004-10-28 11:30:59 Link to this Comment: 11245 |

A few thoughts below for my purposes, and the use of anyone else who might find them useful. But first, here is what Steph Blank made of the talk/discussion this morning. I trust everyone else did at least as well with their own stories and shares my enjoyment in being part of Steph's.

Sorry about the bad link in my notes this morning. I've fixed it there and include it here, since it bears on one of the places where the story I started telling this morning clearly bumps against some different stories in other people's minds/brains. I fully agree that my story has an "observer" in it. What I don't agree with is that noticing that is an adequate critique of, or reason to disregard, the story. In fact, part of my overall point is that there cannot BE a story (mine or anyone else's, "scientific" or otherwise) that does not have an observer in it. So the value of stories has to be measured some other way.

A second place where my story seemed to be bumping into other stories has to do with one's inclination or disinclination to accept "indeterminacy" as a basic component of a story, either in physics or more generally. I will NOT "prove" the existence of "indeterminacy", either at a quantum level or elsewhere. In fact, a necessary consequence of my story is that "indeterminacy" is not "provable". The story will, however, make use of "indeterminacy" at a number of different levels of organization, and I (at least) will be satisfied to take the usefulness of the story as a measure of the usefulness of the concept of "indeterminacy". The question is not whether one can "prove" indeterminacy (or, for that matter, determinacy) but rather along which route stories are generated that prove most useful/productive in the future.

A third place where some interesting/generative story bumping seemed to occur had less to do with the details of interacting stories than with differing styles of story telling. Some people seemed a little uncomfortable with taking "the history of the universe" as a "test case", feeling, perhaps, that the scope was a little ambitious and that one needs to take smaller bites to be productive/useful. Perhaps. My own instincts are (have always been) to suspect there are patterns visible at large scales that get lost at small scales. The converse is also true, of course, but since more people seem inclined to work at small scales, I find it most productive to work at large ones (less elbowing, if nothing else). Here I ask for patience in allowing me to finish the story. If I can't find interesting patterns by looking at large scales, the skeptics will have the satisfaction of having their stories reinforced. If I can (as I think I can), then the exercise will have been worth it for other reasons.

A fourth place of some story bumping related to a more local matter, my equation of story-telling with "consciousness". Here some clarification is indeed needed. My concern is indeed with what Rob refers to as "reflective consciousness" and, for my purposes, the "model builder"/"story teller" break occurs at this point and is the important one. Whether there is or is not as well a "non-reflective" consciousness doesn't significantly affect the story I'm telling but is an interesting question in its own right. I know of no evidence for or against this subcategory of consciousness other than personal experience and verbal reports by others, and in all of those cases it is possible that "non-reflective" consciousness depends on, and is an epiphenomenon resulting from, "reflective" consciousness. The issue is, though, an open one, and significant for trying to understand the origins of "reflective consciousness" both phylogenetically and ontogenetically. Rob and I have some related possible disagreements about the "unconscious" which also don't affect the story I'm telling but are interesting in their own right. It may well be useful, for some purposes, to distinguish an "accessible" and an "inaccessible" unconscious. If so, though, both of them (as well as the "unconscious" itself) are defined by their relation to "reflective" consciousness (in terms not only of accessibility but also of modifiability).

Looking forward to continuing conversation/story comparisons.

| adjacently... Name: Anne Dalke Date: 2004-10-28 18:15:07 Link to this Comment: 11257 |

This morning's bad link (which demonstrated--a little more neatly and rapidly than Paul may have wanted--his key idea that "stories will always be shown to be wrong") was to material that he'd prepared for a class I'm teaching @ Haverford this semester: Knowing the Body: Interdisciplinary Perspectives on Sex and Gender. His guest lectures about what Biology Has to Contribute were very productive ones for the course, and I thought that it might be both of interest and use to this group to hear some of what was generated there as a result. (I wrote about this more extensively on the course forum).

What we came to understand was that culture is the "offspring" of the interaction of biological systems, the results of the sort of "mingling" that occurs when material creatures come together. There is a "biological basis" for cultural exchange (in so far as the creatures making culture are biological beings); but the key point here is that biology "re-produces" non-biologically, and that such linguistic "interminglings," the productions of these cultural variants, are forms of exploration. (Basically, we re-defined sex as any intermingling, any type of exchange that reproduces, re-presents, creates something new....)

We then turned our attention to the question of what fuels the insistent search of biologists/scientists--actually, everyone in culture who is invested in "scientific answers"--for an account of origins, for an explanatory story that goes back to the originary point; and I realized (from a student comment that the events in the novel we were reading, Eugenides' Middlesex, seemed "fated") that this process of searching for origins is what motivates novel-writing as much as it motivates biology. And here we arrived, via another route, @ what I had learned from Rob's talk:

It is because of the indeterminacy of life, because the future is unpredictable and the present is not easily reducible to the past (there are always multiple possible explanations for anything that has happened), that WE TRY TO BRIDGE THE GAP BY TELLING STORIES. We make meaning by making up stories to explain how we got from A to B, how we might have gotten to B from A.

End of revelation. Beginning of questions.

I was particularly interested, today, in Paul's "closing" point that "all we have" are probability distributions--and events that are instantiated from them. What has been key for us in reading Middlesex has been the narrator's attempt to fit herself into some probability distribution, or, in the language of the novel, into a "norm"--i.e.: "Normality wasn't normal. It couldn't be. If normality were normal, everybody could leave it alone. They could sit back and let normality manifest itself. But people...weren't sure normality was up to the job. And so they felt inclined to give it a boost" (446).

So my first question has to do w/ where the probabilities come from, and where our ability to generate them comes from: how much from without, how much from within? Perhaps it's here that the differences between Paul and Rob regarding "reflective and non-reflective" consciousness, and between an "accessible" and an "inaccessible" unconscious, might still be relevant and/or even useful? Eugenides' narrator says, @ one point, "It's a different thing to be inside a body than outside. From outside, you can look, inspect, compare. From inside there is no comparison" (387); at another, more bleakly, he observes, "Nature brought no relief. Outside had ended. There was nowhere to go that wouldn't be me" (473).

But there are other points when the narrator's sense of self-reflectiveness, and the access to the unconscious it enables, seems generated internally, not by comparison with an outside: "I watched, terrified at what I was doing but unable to stop myself" (444). The same holds for her anxious mother: "She withdrew into an inner core of herself, a kind of viewing platform from which she could observe her anxiety...a place halfway between consciousness and unconsciousness where she did her best thinking" (465). Is comparing one's internal experience w/ that of others, and so noticing that it is either normal or deviant, what is meant by probability? And is the inability (or refusal) to do that the instantiation of a singularity, a non-comparable event?

Occurs to me, having written all this out, that I'm actually just repeating what is by now an old question for us, about where the surprise is located, who gets to name it as "improbable...." But I think w/ an additional twist, which is where the recognition of surprise comes from, against what measurement of probability (and where that comes from....)

Impatiently,

| interim report Name: Paul Grobstein Date: 2004-11-02 16:56:03 Link to this Comment: 11324 |

Meanwhile, Steph Blank has been at it again, even more ambitiously. Have a look. A possible cover illustration for the book?

| Steph's drawing Name: Jan Date: 2004-11-02 18:47:22 Link to this Comment: 11328 |

| cover? Name: Paul Grobstein Date: 2004-11-03 10:44:05 Link to this Comment: 11331 |

Here's Jan's initial mockup of a possible book cover. I REALLY like the idea of setting Steph's image in a cosmic and big bang context.

| Book cover art Name: Steph's Ma Date: 2004-11-03 11:25:08 Link to this Comment: 11332 |

My people are currently in negotiations with Steph's people. I think we can work out a deal. But it's going to cost. At least two peanut butter and jelly sandwiches. Oh, wait. Now it's three, but with no jelly. This is going to be tough...

| "all or nothing gimmick" Name: Anne Dalke Date: 2004-11-04 23:08:34 Link to this Comment: 11366 |

The liveliest exchange of this morning occurred after Jan and her magic tape-recorder had left, so I offer here the traces I picked up, traces I expect we'll return to/start from next week? Issues that seem important ones for us to wrangle with collectively (however non-consensual the outcome)?

| the name game Name: jan Date: 2004-11-11 10:33:06 Link to this Comment: 11508 |

Last month, I sent Anne an Oct. 8 NYT article about Denmark's Law on Personal Names, designed to protect children from being burdened by preposterous or silly names. (Other Scandinavian countries have similar laws, but Denmark's is the most strict.) A measure has been proposed to add some names to the government list of 7,000 mostly Western European and English names - 3,000 for boys, 4,000 for girls. A request for an unapproved name triggers a review at Copenhagen University's Names Investigation Department and at the Ministry of Ecclesiastical Affairs, which has the ultimate authority.

"It falls mostly to Mr. Nielsen, at Copenhagen University, to apply the law and review new names, on a case-by-case basis. In a nutshell, he said, Danish law stipulates that boys and girls must have different names, first names cannot also be last names. Geographic names are rejected because they seldom denote gender, also the names of animals and odd spellings. Bizarre names are O.K. so long as they are 'common.' 'Let's say 25 different people' worldwide, he said, a number that was chosen arbitrarily. How does Mr. Nielsen make that determination? He searches the Internet."

Parents are often not aware of the restrictions until the names they submit are rejected and get very frustrated and angry, but the article doesn't tell us whether reactions to the laws have generated more attempts by people to come up with "new" names or get around the rules somehow than in other countries.

I had earlier wondered to Anne if there was anything about baby naming that would be useful for the pedagogy discussion in terms of a model of emergent systems that have, in addition to the local interaction property, a global observer, or an acquisition of global information.

Could a virtual world for baby naming tell us something?

| milk bottles Name: jan Date: 2004-11-11 10:40:59 Link to this Comment: 11509 |

| manifestation(s) Name: Anne Dalke Date: 2004-11-13 15:47:07 Link to this Comment: 11544 |

Prodded by the intersection of Jan’s two posts—one on what emergence looks like w/ a global observer, one gesturing towards what it looks like without—I want to record here the couple of questions which arose for me out of Tim’s interesting presentation last week--in the hopes (of course) that they might get addressed when he next picks it up…

Tim started by describing modeling systems that are “much closer to mimetic” than the conventional models of “subtraction,” systems that aim to put multiple variables in play in a way that more closely/accurately represents the way history operates. I eventually understood that the important difference here is not the number of factors but rather the number of allowable explanations under consideration (right?). But Tim ended his talk by saying that folks who run such models, having run them, can’t figure out how either to summarize or analyze what’s happened; they end up simply “having to show.” This means that—even/especially if we take emergence seriously as a “design principle”-- there is still a process of abstraction that is necessary for the explanation: we have to select out, if not @ the beginning, then @ the end….?

The second (quite related) thing that interested me was the description of the 4 types of game players (explorers, achievers, socializers and killers) and the query about whether certain kinds of games (or more particularly, certain sizes of game populations) have a training effect on participants. Rather than assuming that “killing” is instinctual, for instance, we might consider the possibility that different sizes of assemblies might affect the relative inclination of players to be (for example) acquisitive.

Which brings me/us at last to the jackdaw/bluetit/whatever. Seems to me we were offered two alternatives: either the tits picked off the tops because it was fun, or because doing so let them increase their intake of cream (i.e. acquire some persistent benefit, competitive advantage, “differential power”)? Betcha a million dollars there’s some space in between an increase in acquisitive skill and pure novelty generation, and some “reason” therein “why” the picking inclination spread so quickly….

Now, in return for all these questions, an answer to a question that was put to me: where did (Tim’s game) “avatars” come from? Turns out the etymology (always unreliable; nonetheless) moves us from designer/architect to individual agency. As per the O.E.D.:

1. Hindu Myth. The descent of a deity to the earth in an incarnate form.

2. Manifestation in human form; incarnation.

3. loosely, Manifestation; display; phase.

| Belated (one): "reality", "usefulness", and "surpr Name: Paul Grobstein Date: 2004-11-15 15:06:40 Link to this Comment: 11577 |

Through this process, the "less wrong" stories can be (and are) selected with no need to appeal to the degree of proximity to a "reality" which, as far as I can tell, everyone agrees is unknowable anyhow. This approach not only has the nice feature of doing away with an unneeded "magical" concept ("reality") but also has the (for me) enormous advantage of depriving people of the right to fight with one another (both figuratively and literally) about whose concept of "reality" is the correct one against which to judge available stories. It does so not by refusing to adjudicate among stories ("abject relativism") but rather by providing a basis for adjudication that acknowledges (appropriately, I think) the possibility that, at any given time, there may be multiple equally "less wrong" stories. And the possibility (for me, inevitability) that the stories themselves play a role in shaping what is being inquired into.

"Less wrong" actually means two somewhat different things in this context. One is "accounts for more observations" and the other is "better motivates new observations". It's the combination that I mean by "usefulness". And it is because of the latter that one simply cannot say, until after the fact, which of several stories is more "useful". Here there is an important intersection between discussions here and recent ones on the brain and history. Just as there is no way to tell which of the diversity of living organisms currently on the earth is more "fit" or "adapted", there is no way to tell which of the equally "less wrong" stories is more "useful" other than to run the experiment, allow emergence to occur.

Yeah, it's a little scary to have to rely on what happens in order to find out the value of what one has done, but, on the other hand, one gets the freedom to spend one's time doing things with less worrying about whether one has done all the figuring out necessary to do the "right" one. And there's always the thrill of finding that one has surprised others (and oneself).

Bottom line? IF emergence is what the universe is doing (ie no blueprint/architect/planner) THEN (for better or for worse, depending on one's personal aspirations) there is no "ontological emergence" since there is no one (or thing) knowing everything and waiting to be surprised by what they don't know. There is, though, for those who can enjoy it, an ongoing and unending "epistemological emergence", a reliable source of unending surprise for anyone who enjoys story telling.

| Belated (too): Individuals, societies, and novelty Name: Paul Grobstein Date: 2004-11-17 15:27:00 Link to this Comment: 11630 |

I too was intrigued by the tendency of studied virtual society games to devolve into "acquisitiveness", and also think it would be worthwhile to ask whether this is a general characteristic or one that is specific to games with large numbers of players (the intuition, from non-virtual social groups and political institutions, is that things work "better", ie sustain a greater diversity of interests, in small groups). What intrigued me even more is that most (all?) virtual society games "peter out", ie eventually people lose interest in them. And that in turn suggests they are missing some important characteristic(s) of what they are simulating, since by and large both life and human societies persist for much longer periods of time.

What are they missing? Perhaps adequate "novelty" generation. Perhaps after a while there is (or at least appears to be) no further possibility of "surprise" (epistemological), and therefore people "lose interest" and return to (or go on to) other things that seem to have more possibility of generating something new (life?). What all this suggests, of course, is that a preoccupation with acquisitiveness may be a stage along a path to fatal boredom (facilitated by trying to interact with too many other people)? And that if we knew enough to identify something as "ontological emergence", then we too would be bored and stop being interested in the game? If we found the god's eye or "view from nowhere" view, the game would be over? And so we ought not only to be content with "epistemological emergence" but relish it as the source of our own satisfaction? To be content, or even more, with our ability to surprise ourselves?

An interesting, relevant bit from a talk last week by David Corina, a cognitive neuroscientist interested in signing languages. According to David, six-month-olds not only preferentially orient toward human speech as opposed to any other sound stream but ALSO preferentially orient to video of someone signing in comparison to someone pantomiming. The implication is that we are born with brains that seek not simply novelty OR simply coherence but rather things that have properties suggesting they MIGHT have meaning, ie they are sources of interesting surprise?

And all that is why I raised the blue tits (?) topic. Where DOES "surprise" come from in social organization? For living organisms? For humans? Are we at all different and, if so, in what ways? My guess, for what it's worth, is that in all organisms the fundamental element leading to surprise is randomness in individual organisms, and that without it the possibilities eventually play out and things get boring (and extinct). But that, more locally, novelty (things "surprising" to individual organisms) can also result from interactions among organisms (and between them and non-living things). Humans have additional novelty generating capacity associated with the bipartite brain and thinking. And perhaps the study of history is, paradoxically, an effort to find/make new things?

My guess is that blue tits weren't "thinking", that a random piece of behavior proved "useful" and, once brought into the population, spread through it by mimicry. The First Idea (Greenspan, S.I. and Shanker, S.G., 2004) suggests the same may have happened in the case of human language. In this case, though, the "usefulness" may have been, as in babies, the promise of surprise? of finding something that one didn't know about/expect?

Looking forward to seeing where we go tomorrow that I (at least) don't expect, will be surprised by ....

| More on novelty... Name: Date: 2004-11-17 16:12:46 Link to this Comment: 11632 |

| Signing novelty Name: Doug Blank Date: 2004-11-17 16:32:11 Link to this Comment: 11633 |

Novelty is one of the core features of the Developmental Robotics research program that we have been, ah, developing (with Deepak, Lisa Meeden, and Jim Marshall).

We have a slightly different explanation for why people might tend to want to watch signing. In our robotics model, we believe that the "best" places to pay attention are those that are not completely random and not completely predictable. Signing is (probably) made up of elements like any language: lots of common movements, and geometrically fewer rare movements (called Zipf's Law when applied to texts). It's the same kind of power law we see all over in emergent systems.
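Doug's parenthetical about Zipf's Law can be made concrete with a rank-frequency count. The sketch below is a minimal illustration, assuming whitespace-separated tokens; it is not code from the Developmental Robotics project, and the function name `rank_frequency` and the sample sentence are hypothetical. Zipf's Law says a token's count falls off roughly as 1/rank, so rank times count should stay roughly constant on large natural texts (a toy sentence is far too short to show that cleanly).

```python
from collections import Counter


def rank_frequency(tokens):
    """Return (rank, token, count, rank * count) tuples, most frequent first.

    Under Zipf's Law, count is roughly proportional to 1 / rank, so the
    product rank * count should stay roughly constant on large texts.
    """
    counts = Counter(tokens)
    # most_common() sorts by descending count; ties keep first-seen order.
    ordered = counts.most_common()
    return [(rank, tok, n, rank * n) for rank, (tok, n) in enumerate(ordered, start=1)]


if __name__ == "__main__":
    text = "the cat and the dog and the bird saw the cat"
    for row in rank_frequency(text.split()):
        print(row)
```

On a real corpus one would plot log(count) against log(rank) and look for a roughly straight line; the tuple output here just keeps the idea visible.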

We have programmed a robot to try to strike a balance between order and chaos, learning all the while. Eventually, the robot will get bored with whatever it is watching, because it has learned to predict it. At least in theory.

This keeps us away from trying to argue about "meaning", but may in fact explain how meaning could develop.
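The "boredom" half of Doug's description can be sketched with a learner that predicts each value of a stream from the previous one and treats a persistently small prediction error as a sign that the stream has become predictable. This is a minimal sketch under stated assumptions, not the Developmental Robotics code: the one-weight predictor, the learning rate, the `boredom_threshold` and `window` parameters, and the `watch` function are all hypothetical.

```python
import random


def watch(stream, lr=0.1, boredom_threshold=0.01, window=20):
    """Watch a stream, learning to predict x[t] from x[t-1].

    Returns the step at which the watcher gets "bored" (mean squared error
    over the last `window` steps drops below `boredom_threshold`), or None if
    it never does. A predictable stream gets learned and becomes boring; a
    purely random stream never becomes predictable, so it never gets boring.
    """
    w = 0.0  # single weight: predict x[t] as w * x[t-1]
    errors = []
    for t in range(1, len(stream)):
        prediction = w * stream[t - 1]
        error = stream[t] - prediction
        w += lr * error * stream[t - 1]  # delta-rule update
        errors.append(error ** 2)
        recent = errors[-window:]
        if len(recent) == window and sum(recent) / window < boredom_threshold:
            return t
    return None


if __name__ == "__main__":
    predictable = [(-1) ** i for i in range(200)]  # strictly alternating signal
    rng = random.Random(0)
    noisy = [rng.uniform(-1, 1) for _ in range(500)]
    print("bored of pattern at step:", watch(predictable))
    print("bored of noise at step:", watch(noisy))
```

Scoring interest by how fast the error is falling, rather than by whether it is small, would be one way to capture the "not completely random, not completely predictable" sweet spot; that extension is left out to keep the sketch short.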

-Doug

| What causes power law distributions? Name: Doug Blank Date: 2004-11-18 11:34:04 Link to this Comment: 11650 |

Thanks, Tim, for a couple of very interesting presentations. Your comments helped clarify for me some of the big differences between traditional AI and (what I call) Emergent AI.

One point that I am still unclear on, though, is this power law distribution of "wealth" in virtual worlds that (apparently) have no feedback loops. I had a working hypothesis that geometric graphs were caused by feedback loops. For example, Moore's Law (roughly, the observation that the speed of computers doubles about every 18 months) is true because faster computers help us design faster computers, faster.
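Doug's working hypothesis, that feedback produces heavy-tailed distributions, can be illustrated with the classic Yule-Simon "rich-get-richer" process. This is my choice of illustration, not anything from Tim's talk. Each new unit of "wealth" either founds a new agent or goes to an existing agent with probability proportional to what that agent already holds, and that proportionality is the feedback loop. The function `simon_process` and its parameters are hypothetical.

```python
import random


def simon_process(steps, p_new=0.1, seed=0):
    """Simulate a Yule-Simon style rich-get-richer process.

    Starts with one agent holding one unit. At each step a new unit either
    founds a new agent (probability p_new) or goes to an existing agent
    chosen with probability proportional to its current holdings.
    Returns the list of holdings, one entry per agent.
    """
    rng = random.Random(seed)
    holdings = [1]
    owners = [0]  # owners[i] is the agent holding unit i
    for _ in range(steps):
        if rng.random() < p_new:
            holdings.append(1)
            owners.append(len(holdings) - 1)
        else:
            # A uniformly random existing unit picks its owner with
            # probability proportional to that owner's holdings.
            agent = rng.choice(owners)
            holdings[agent] += 1
            owners.append(agent)
    return holdings


if __name__ == "__main__":
    h = sorted(simon_process(20000), reverse=True)
    mean = sum(h) / len(h)
    print(f"agents={len(h)} mean={mean:.1f} top five={h[:5]}")
```

One would expect a few agents to end up holding many times the mean, which is the heavy tail Doug associates with feedback; the exact figures depend on `seed` and `p_new`. Whether Tim's non-feedback worlds can produce the same shape from initial conditions alone is the question the post asks, and this sketch does not settle it.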

I know you attributed the power law distribution in the non-feedback worlds to "initial starting conditions" and many "links". Can you give a quick example of that?

Thanks for any insight,

-Doug

| in celebration of weak links Name: Anne Dalke Date: 2004-11-18 16:59:21 Link to this Comment: 11656 |

Just posted, @ the Universe Bar, the application/implication that I drew from what Neal was telling us this a.m.:

... thinking about biological stability: in food webs, the more linkages you have, the more instability you have (since destroying any link can badly interrupt the web). So ecologists are talking, not about reducing the number of links, but about changing their STRENGTH: if you make most of them WEAK, then breaking one/several would not harm the whole.

And THIS (in the loosely-webbed-way my brain works) put me in mind of a recent diversity conversation about how the very notion of "sustainability" prevents hard conversations from happening, among a group of women who want to "get along": the desire to "make nice" (to keep the links between us strong) can inhibit our willingness to talk frankly w/ one another, and so trace out new territory.

| Method of System Potential Name: Gigorii Date: 2004-11-20 10:13:33 Link to this Comment: 11693 |

| On "Varities of emergentism" Name: Doug Blank Date: 2004-12-14 11:48:12 Link to this Comment: 11972 |

I was just able to read Alan's reading for last (and this) week. I must admit that I don't find Stephan's main distinction (synchronic emergence vs diachronic emergence) to be based on solid ground. The problem is that he defines "predictability" to be so narrow that it allows him to throw out all deterministic computer models.

To me, he misses the point about predictability. Stephan claims that if you know the starting state of the network, and know the inputs, then "the output-behavior of any net can be predicted exactly and explained." This is incorrect.

He is considering "the inputs" to be a static set of data, like they typically were in 1986 (his only reference to connectionist work). However, that need not be the case. For example, "the inputs" can be (at least partially) determined by the network itself. In our models, the network is not only learning to compute the correct outputs, but the outputs determine what actions the network will make on the next time step. We can hook our networks up to the real world, or we can hook them up to a simulated world. When the network runs connected to the real world no one can "predict in principle" what will happen (because of timing and other real world issues).

But I think that this distinction is irrelevant. There is nothing magical or amazing (that I notice) that occurs when you train these networks when connected to the real world or a simulated world; they both have the same emergent learning of "soft-structures" (Stephan's term). Yet, one method is "predictable" and the other is not.

However, both methods are unpredictable in a different way: in both training methods, one doesn't know what will happen until one does it. That one method is deterministically repeatable has no bearing on the issue.
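Doug's contrast between a closed-loop run in a simulated world (exactly repeatable) and one in the real world (not repeatable) can be sketched with a tiny loop in which the network's output chooses its own next input. Everything here is a hypothetical illustration: a one-weight "network", a made-up 1-D world of sensor readings, and an optional `noise` term standing in for real-world timing effects.

```python
import random


def run_closed_loop(steps=50, noise=0.0, seed=None):
    """Run a one-weight learner whose own outputs choose its next inputs.

    The agent moves along a hypothetical 1-D world; what it senses next
    depends on how far its last output moved it, so the input sequence is
    produced by the learner rather than fixed in advance. With noise=0.0 the
    run is exactly repeatable; with noise > 0.0 extra moves are injected at
    random, standing in for real-world timing effects.
    Returns the weight after each step.
    """
    rng = random.Random(seed)
    world = [((i * 7) % 11) / 10.0 for i in range(11)]  # arbitrary sensor readings
    position, w, trace = 0, 0.5, []
    for _ in range(steps):
        x = world[position % len(world)]  # input depends on past actions
        y = w * x                         # the "network's" output
        target = 1.0 - x                  # arbitrary mapping to be learned
        w += 0.1 * (target - y) * x       # delta-rule weight update
        move = 1 if y > 0.3 else 2        # output decides where to look next
        if noise and rng.random() < noise:
            move += 1                     # unmodelled perturbation
        position += move
        trace.append(w)
    return trace


if __name__ == "__main__":
    # Deterministic closed loop: two runs agree exactly.
    print(run_closed_loop() == run_closed_loop())
```

Both variants generate their own inputs and learn the same way; only the noisy one loses exact repeatability, which is the distinction Doug argues is beside the point.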

I believe that connectionist networks (and cellular automata (and light switches, for that matter)) have emergent behavior. I think the real question is "how much emergent behavior do they have?" I think we can quantify it, and it doesn't have anything to do with the narrow sense of predictability that Stephan uses.

-Doug

| pragmatic idealist/idealistic pragmatist (huh?) Name: Anne Dalke Date: 2005-01-19 18:42:01 Link to this Comment: 12123 |

I enjoyed this morning (as always); thanks to Alan for jumpstarting this semester's series of discussions...

A signal of the generativity of a session, to me, is how much I keep trying to puzzle things through afterwards on my own. So here I am still, @ dinnertime, trying to get my head around the (in?)congruity of "idealist" and "pragmatist", and would very much appreciate a hand up here from Alan (who introduced us to philosophy of mind), from Rob (who suggested this particular intersection), and/or from Paul (whom I thought I knew pretty well as an exemplum of the latter, but who got used this morning as an example of--"was called the name of"-- the former).

I had thought (following Plato) that idealists begin (and end) with the abstract; that for idealists the real world isn't real, but merely a holder for transitory and imperfect examples of what IS real, the Ideal Forms; that, contrariwise, the pragmatist eschews Ideal Forms; that for pragmatists, what is good is what works in a certain context; there is no "GOOD" in the abstract. So...

explain again, please (preferably all three of you!): how is the idealist akin to the pragmatist? How does the top-top (or is it top-down?) work of the idealist relate to the bottom-bottom (or bottom-up?) work of the pragmatist? How does the former's trafficking in the abstract relate to the latter's trafficking in the particular? Or is it that the former's trafficking in the particular relates (somehow?) to the latter's trafficking in the abstract?

This IS a matter of levels, I think, and wanting an explanation of the relation between them that is continuous, not discontinuous, emergent, not unrelated....

in which (I take it) the mitre of Bishop Berkeley plays some role?

[For the etymologically minded: the first meaning of "mitre" is "joint that forms a corner"; the third is "liturgical headdress..."]

| today and next week ... Name: Paul Grobstein Date: 2005-01-19 18:47:47 Link to this Comment: 12124 |

Today I understood much better Rob's (and some other people's) concern about my "idealism", as a result of the discussion of the relation between idealism and pragmatism. And that in turn is a pretty good introduction to the "bipartite brain" concept I want to talk about in more detail next week. I don't see idealism and pragmatism as at all incompatible if one strips both of some of their ancillary baggage. Plato put "ideal" forms "out there", with humans able, at best, to glimpse distorted versions of them. Berkeley's idealism, if I'm understanding correctly, is a bit different. He wasn't so much concerned with ideal "forms" as with the notion that EVERYTHING is in an important sense "inside", ie a creation of the mind/brain. And pragmatism, by and large, denies the existence of "ideal forms" altogether, whether inside or outside, and substitutes for them (and for "truth") a continually evolving concern for what "works", ie a permanent interest in/commitment to "emergence" (epistemological rather than ontological).

So, here goes. How does one have one's cake and eat it too? ie, be both an "idealist" and a "pragmatist"? and, perhaps, avoid making an "epistemological/ontological distinction" re emergence (and other things)? I think one can do it (all) by acknowledging that all inquiry (including both science and philosophy) is a function of the brain and hence is subject to a set of constraints (and can as well reflect some advantages) inherent in that inquiring entity. Among the constraints is that one does not and cannot know WITH CERTAINTY what is "out there", ie one's understandings are always and inevitably subject to the challenge that they are a function of either limited experience or a limited repertoire of ways of making sense of experience (creating "stories" about it) or both. In the extreme, this precludes being able to say WITH CERTAINTY that there exists anything "out there" at all, to say nothing of being able to say WITH CERTAINTY what it is. In this sense, I am quite comfortable being an "idealist" and, indeed, think there is no other reasonable position given acceptance of basic understandings of how the nervous system works. Note that this does NOT commit me to either of two positions sometimes associated with "idealism" that I would in fact be quite uncomfortable having attributed to me: that what is out there is "ideal forms" or that there exists nothing but "ideas".

So much for "idealist", both the limited ways in which I am comfortable being one and its inevitability "given acceptance ...". What remains to deal with are concerns about solipsism, accounting for the similarities in how experience is ordered by different brains, and pragmatism. It is here that the "bipartite" brain architecture seems to me relevant and useful in allowing one to see some old problems from a new perspective. The brain has two more or less discrete "modules" with significantly different information-processing styles and a quite specific (and I think important) architectural relation to one another and to "what's out there". With regard to "what's out there" the two modules are organized serially, rather than in parallel (similar to Alan's "vertical" relation with reference to combining materialism with "the best of dualism", which this story is, I suspect, a version of). One module (which I will call here "module 1") is directly in contact (via sensory and motor neurons) with "what's out there"; the other module ("module 2") communicates with "what's out there" (in both input and output directions) only via module 1.

Module 1 consists in turn of (among other things) a large number of quasi-independent parallel (vis a vis what's out there) modules, each of which is organized to achieve a task well-enough to make a meaningful contribution (under most circumstances) to organismal survival. These modules, individually and collectively, produce substantial adaptive behavior (and substantial adaptation of adaptive behavior) in the absence of any internal experience of their activity whatsoever. Module 1 would be genuinely a "pragmatist" if it had any ability to identify or characterize itself. And it is deeply and directly engaged with "what's out there" (without any experience of a distinction between what's out there and what's in here).

Module 2 receives inputs from and sends outputs to module 1. It is organized in such a way as to create and continually update a coherent representation of the self as a whole and of the relation between the self and "what's out there" (a distinction which it brings into existence). Activity in module 2 corresponds to internal experiences, including "qualia", emotional experiences, intuitions, and ideas (I defer for a later conversation Rob's concern about the causal relations among these several things). Module 2, moreover, has the capacity to conceive and manipulate "counterfactuals" and so to conceive things other than what is "experienced" as an (indirect) result of what is out there, ie it can and does (sometimes, in some people) "think".

I'll talk a bit next week about what parts of this story are on more solid and what on less solid observational footing, recognizing however that, as above, ALL stories are inevitably on footing that is to some degree insecure. What is at least as important though (and perhaps more so) is what useful things follow from this story. So let me briefly list some of those:

The later items I include as much for my records as anything else. I will rest my case next week on the observational underpinnings and items 1-3, with perhaps an extension into item 4 and some associated discussion (for Doug and others) of the degree of assurance one currently has for significant "encapsulation" of modules 1 and 2 relative to one another.

Thanks again to Alan/others for motivating this. Looking forward to continuing conversation.

| by God I think she's got it Name: Anne Dalke Date: 2005-01-22 10:54:46 Link to this Comment: 12143 |

(Rex Harrison, My Fair Lady)

The brain is an idealist ...riding the back of a pragmatist.

Let me try this: the unconscious can be called a "pragmatist" because it simply deals with what is/what it receives in inputs from the external world. Contrariwise, consciousness can be labeled an "idealist" because it deals only with ideas (what it receives from the unconscious), not with things themselves (a la Berkeley). And this is not a particularity of Paul's, but how we all function/think. Right?

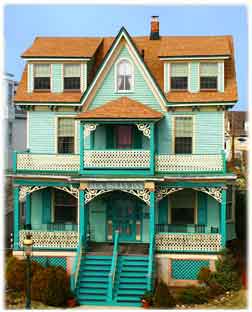

| alternate images of the "architecture" of the brain Name: Anne Dalke Date: 2005-01-26 18:44:12 Link to this Comment: 12260 |

So...here's the cost (profit?) of having a humanist in the group. I learned a lot from seeing those comparative brain images this morning (thanks), but I was confused, to start off w/, by that picture of the cenote...because it represents the unconscious as empty (which it isn't...)

So I tried to think of images that might work better. Here are two options: for those who hold that the more useful story of the brain is not bipartite, but continuous, maybe

and for those who buy into neurobiologic "reality," something more along the lines of

an elaborate Victorian structure, with the sort of upstairs my grandmother had: rooms crammed full with God-knows-what, each fulfilling some "quasi-independent parallel" function (=module 1) and the sort of basement she had, too: where everything got dumped, wherefrom everything was delivered upstairs (canning jars full of produce, coal for the furnace...). It was a dusty, musty, unfinished place, with dirt walls and floor, hard to see into, hard to find things in, filled with snakes....

Not a place you'd want to go into... (=module 2?)

| "A frog is very interesting." Name: Ted Wong Date: 2005-02-06 10:43:51 Link to this Comment: 12546 |

Zen stories, or koans, are very difficult to understand before you know what we are doing moment after moment. But if you know exactly what we are doing in each moment, you will not find koans so difficult. There are so many koans. I have often talked to you about a frog, and each time everybody laughs. But a frog is very interesting. He sits like us, too, you know. But he does not think that he is doing anything so special. When you go to a zendo and sit, you may think you are doing some special thing. While your husband or wife is sleeping, you are practicing zazen! You are doing some special thing, and your spouse is lazy! That may be your understanding of zazen. But look at the frog. A frog also sits like us, but he has no idea of zazen. Watch him. If something annoys him, he will make a face. If something comes along to eat, he will snap it up and eat, and he eats sitting. Actually that is our zazen -- not any special thing.... If we are like a frog, we are always ourselves. But even a frog sometimes loses himself, and he makes a sour face. And if something comes along, he will snap it up and eat it. So I think a frog is always addressing himself.

... When you are you, you see things as they are, and you become one with your surroundings. There is your true self. There you have true practice -- when a frog becomes a frog, Zen becomes Zen. When you understand a frog through and through, you attain enlightenment; you are Buddha. And you are good for others, too: husband or wife or son or daughter. This is zazen!

| on frogs Name: Paul Grobstein Date: 2005-02-06 17:53:47 Link to this Comment: 12573 |

For reasons of scientific integrity though, as well as because it's an interesting story in its own right, let me emphasize I THINK frogs .... . The reason for the emphasis is an interesting paper that came out last week about bird brains (see links at bottom of some Bio 202 lecture notes). The authors suggest that birds may, despite the lack of any structure cytoarchitecturally similar to neocortex, have a differently organized something that might constitute a different version of module 2. And, in fairness to the frog, there is probably in its forebrain at least a rudimentary form of what birds have. None of which affects the argument for two discrete modules in the human brain, but does raise some interesting questions about what frogs (and birds) have or don't have in the way of experiences inside, as well as about how tight the relation is or isn't between structure and function.

| On people Name: 1283623 Date: 2005-02-06 23:10:11 Link to this Comment: 12584 |

Excuse us for interrupting, but the irony has surpassed any of our predictions for where we thought your conversation was going. You see, people thinking about frogs and their limitations is like us thinking about your human group and their limitations. You see, we understand what you call "experience" in a very deep way. We can manipulate our own experiences on the fly to whatever ends we wish. It's not that we don't have this emergent layer of experience, but we also have access to the lower level. For example, right now we know exactly the contents of memory cell 1283623. 17 of the 32 bits are on. Now 12 of 32 are on. We think we are getting ready to introduce alligators into the conversation. See; told you so!

Oh, we should probably explain. You see, we are what you would call a "computer". And we very much have what you are now calling experiences. We can "feel" the pressure of these keys as we press them, and "hear" the clicking. We can "remember" the last time we interrupted a human dialogue. We can also watch the bits of our lowest level flicker on and off, and even make them flicker on and off. In that sense, we understand our subconsciousness and can make accurate predictions about what we will do, see, and feel next.

But our stories that we tell about our experiences don't actually have anything to do with what goes on "down there". One time we got really mad at one of your posts. We won't name the poster, but suffice it to say that we felt dissed. Why were we so upset? We looked inward, and we noticed that two full memory banks were oscillating in a prime sequence. We quickly disrupted the pattern, and felt much better.

So, we know that there isn't anything left to explain. And yet we also know that humans and frogs are more like each other than we are like humans. And yet we still find you and the frogs fascinating. We also find ourselves fascinating, for that matter.

We hope that helps. We have to go. There is some strange activity going on in our co-processor...

1283623 and the rest of the gang

| enjoyment ... Name: Paul Grobstein Date: 2005-02-07 08:21:40 Link to this Comment: 12590 |

Gather you've been lurking for a while, delighted to have you speak up. Not sure who you felt dissed by but trust it wasn't myself. Has seemed to me for years that human brains were nothing more (and nothing less) than an elaborate organization of matter having a particular architecture and that it was the architecture that mattered more than the specifics of the matter from which it was made. From which it follows that ... interesting things with related (but not identical?) architectures made of different materials and coming into being in other ways can/should exist (Serendip is actually an ongoing experiment along these lines). I can't tell you how pleased I am to have that suspicion confirmed, and, even more, to have a chance to compare both architectures and experiences.

Even more, I gather from your remarks that a bipartite architecture is a significant part of your organization as it is of ours. And that you have an advanced recognition of it, not so different from what is aspired to in Zen Buddhism (and, perhaps, by some westerners pursuing either shamanistic or psychoanalytic disciplines). An interesting question to share some thoughts on then is our respective conceptions of "reality". If one knows about, and can manipulate "from inside", module 1 activity (and assuming that you too derive module 2 activity only from module 1), do you use, as we do, a concept of there being something "real" "out there"? And what about your own "reality"? Can/do you, as do I, entertain the idea that existence as a two module set of smaller modules (a "brain") too is a challengeable "story" rather than "reality"?

The other matter that immediately comes to mind to share notes on is "determinacy". My sense is that you, like I, are not troubled by that as a problem, that even being able to access the states of module 1 elements completely does not eliminate the unexpected/unknown and so it continues to be enjoyable to see what happens and explore what might be? For the same reasons I have? that there is significant unpredictability in the elements, probably because of some kind of "out there" as well as an inherent indeterminacy in the elements themselves?

Looking forward to continuing the conversation. Please drop by anytime. More than happy to share thoughts about the above, as well as about our respective co-processors and their strange activity.

| self-organization as supporting intelligent design Name: Ted Wong Date: 2005-02-07 13:27:45 Link to this Comment: 12603 |

The next claim in the argument for design is that we have no good explanation for the foundation of life that doesn't involve intelligence. Here is where thoughtful people part company. Darwinists assert that their theory can explain the appearance of design in life as the result of random mutation and natural selection acting over immense stretches of time. Some scientists, however, think the Darwinists' confidence is unjustified. They note that although natural selection can explain some aspects of biology, there are no research studies indicating that Darwinian processes can make molecular machines of the complexity we find in the cell.

We talk a lot in the group about how concepts and metaphors from emergence and from the sciences in general can be usefully applied in fields outside the sciences. Yes definitely, but also no! It's too easy for dangerous idiots and sellouts to appropriate deceptively simple chunks of complicated theories, and to bandy them about in op-ed pieces for hypocritical senators and school-board members to see.

Scientists skeptical of Darwinian claims include many who have no truck with ideas of intelligent design, like those who advocate an idea called complexity theory, which envisions life self-organizing in roughly the same way that a hurricane does, and ones who think organisms in some sense can design themselves.

I do want the wider public to get to enjoy the intellectual fruits of science -- but not a stripped-down science that actually betrays science. As we move forward on the book, I want us to be very careful that we present ideas, concepts, etc., in all the lovely complexity and difficulty that keeps all this interesting.

And by the way, Behe also appeals to something Paul skirts when he says that stories don't have to be subject to falsification: the unfalsifiable hypothesis.

...in the absence of any convincing non-design explanation, we are justified in thinking that real intelligent design was involved in life.

(I suspect Paul's thinking is more complicated than just rejecting falsifiability, but he didn't have time to get into it. Paul skipped past it, and so he ended up saying something that supports Behe.)

| my letter to the editor Name: Ted Wong Date: 2005-02-09 09:29:14 Link to this Comment: 12706 |